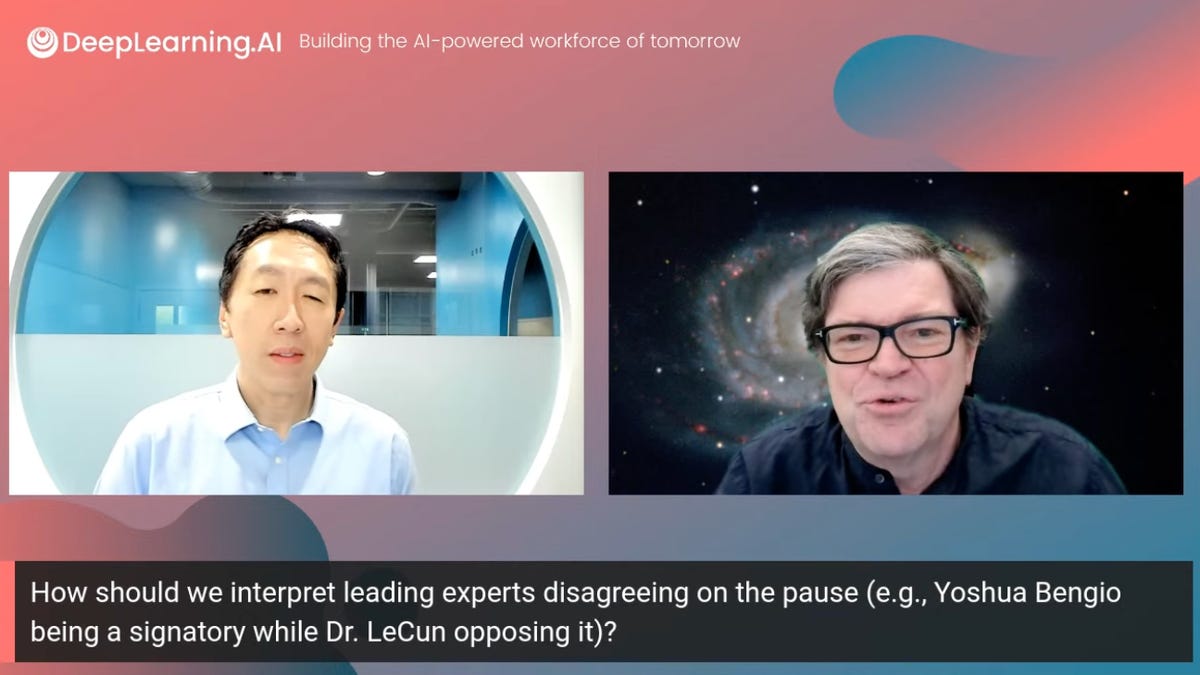

“Quite a few very smart people signed on to this proposal, and I think both Yann and I are concerned with this proposal,” said Andrew Ng, left, founder and CEO of applied AI firm Landing.ai and AI educational outfit DeepLearning.ai. He was joined by Yann LeCun, chief AI scientist for Meta Platforms.

DeepLearning.ai

During a live webcast on YouTube on Friday, Meta Platforms’s chief AI scientist, Yann LeCun, drew a line between regulating the science of AI and regulating products, pointing out that the most controversial of AI developments, OpenAI’s ChatGPT, represents a product, not basic R&D.

“When we’re talking about GPT-4, or whatever OpenAI puts out at the moment, we’re not talking about research and development, we’re talking about product development, okay?” said LeCun.

“OpenAI pivoted from an AI research lab that was relatively open, as the name indicates, to a for-profit company, and now a kind of contract research lab mostly for Microsoft that doesn’t reveal anything anymore about how they work, so this is product development; this is not R&D.”

LeCun was holding a joint webcast with Andrew Ng, founder and CEO of the applied AI firm Landing.ai and of the AI educational outfit DeepLearning.ai. The two presented their argument against the six-month moratorium on advanced AI development that has been proposed by the Future of Life Institute and signed by numerous luminaries.

The proposal calls for AI labs to pause, for at least six months, the training of AI systems more powerful than OpenAI’s GPT-4, the latest of several so-called large language models that are the foundation for AI programs such as ChatGPT.

A replay of the half-hour session can be viewed on YouTube.

Both LeCun and Ng made the case that delaying research into AI would be harmful because it cuts off progress.

“I feel like, while AI today has some risk of harm (I think bias, fairness, concentration of power, these are real issues), it is also creating real value, for education, for healthcare; it is incredibly exciting, the value so many people are creating to help other people,” said Ng.

“As amazing as GPT-4 is today, building something even better than GPT-4 will help all of these applications to help a lot of people,” continued Ng. “So, pausing that progress seems like it would create a lot of harm and slow down the creation of a lot of very valuable stuff that would help a lot of people.”

LeCun concurred, while emphasizing a distinction between research and product development.

“My first reaction to this is that calling for a delay in research and development amounts to a new wave of obscurantism, essentially,” said LeCun. “Why slow down the progress of knowledge and science?”

“Then there’s the question of products,” continued LeCun. “I’m all for regulating products that get in the hands of people; I don’t see the point of regulating R&D. I don’t think this serves any purpose other than reducing the knowledge that we could use to actually make technology better, safer.”

LeCun referred to OpenAI’s decision, with GPT-4, to sharply limit disclosure of how its program works, offering almost nothing of technical value in the formal research paper introducing the program to the world last month.

“In part, people are unhappy about OpenAI being secretive now, because most of the ideas that have been used by them in their products were not from them,” said LeCun. “They were ideas published by people from Google, and FAIR [Facebook AI Research], and various other academic groups, etc., and now they’re kind of under lock and key.”

LeCun was asked during the Q&A why his peer, Yoshua Bengio, head of Canada’s MILA institute for AI, has signed the moratorium letter. LeCun replied that Bengio is particularly concerned about openness in AI research. Indeed, Bengio has said OpenAI’s turn to secrecy could have a chilling effect on fundamental research.

“I agree with him” about the need for openness in research, said LeCun. “But we’re not talking about research here, we’re talking about products.”

LeCun’s characterization of OpenAI’s work as merely product development is consistent with his prior remarks that OpenAI has made no actual scientific breakthroughs. In January, he remarked that OpenAI’s ChatGPT is “not particularly innovative.”

During Friday’s session, LeCun predicted that the free flow of research will produce programs that match or exceed GPT-4 in capabilities.

“This is not going to last very long, in the sense that there are going to be a lot of other products that have similar capabilities, if not better, within relatively short order,” said LeCun.

“OpenAI has a bit of an advance because of the flywheel of data that allows them to tune it, but this is not going to last.”