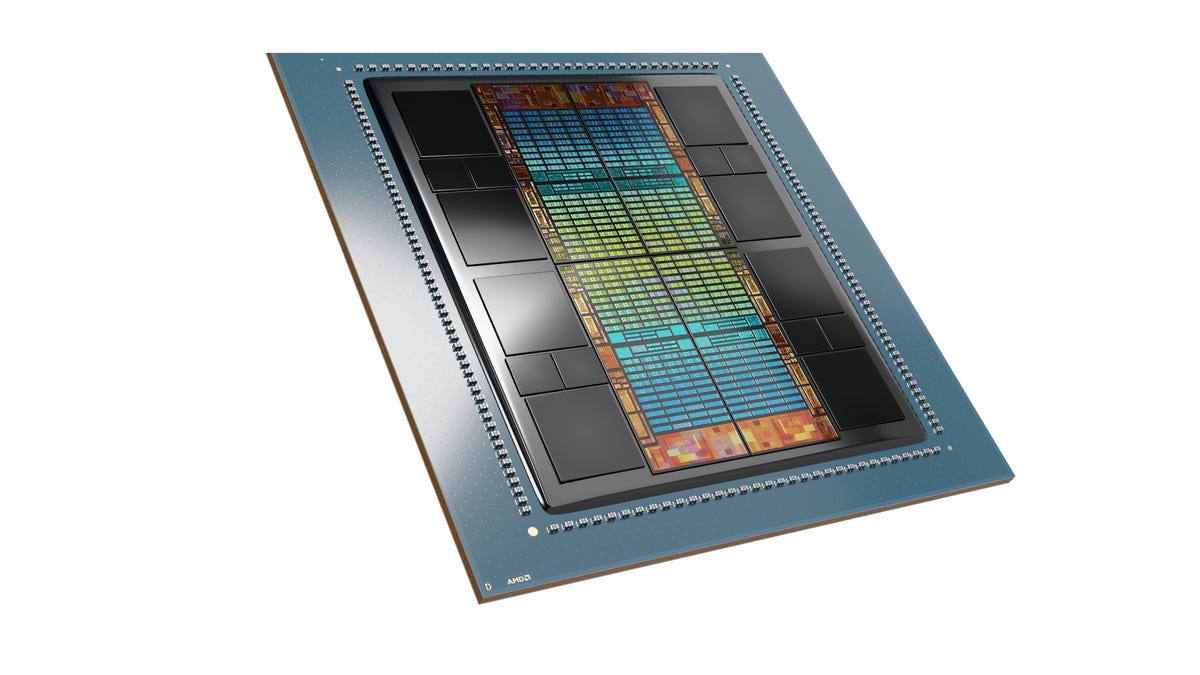

AMD’s Instinct MI300X GPU features multiple GPU “chiplets” plus 192 gigabytes of HBM3 DRAM memory, and 5.2 terabytes per second of memory bandwidth. The company said it is the only chip that can handle large language models of up to 80 billion parameters in memory.

AMD

Advanced Micro Devices CEO Lisa Su on Tuesday in San Francisco unveiled a chip that is a centerpiece of the company’s strategy for artificial intelligence computing, boasting of its enormous memory and data throughput for so-called generative AI tasks such as large language models.

The Instinct MI300X, as the part is known, is a follow-on to the previously announced MI300A. The chip is really a combination of multiple “chiplets,” individual chips that are joined together in a single package by shared memory and networking links.

Su, onstage for an invite-only audience at the Fairmont Hotel in downtown San Francisco, referred to the part as a “generative AI accelerator,” and said the GPU chiplets contained in it, a family known as CDNA 3, are “designed specifically for AI and HPC [high-performance computing] workloads.”

The MI300X is a “GPU-only” version of the part. The MI300A is a combination of three Zen4 CPU chiplets with multiple GPU chiplets. But in the MI300X, the CPUs are swapped out for two additional CDNA 3 chiplets.

The MI300X increases the transistor count from 146 billion transistors to 153 billion, and the shared DRAM memory is boosted from 128 gigabytes in the MI300A to 192 gigabytes.

The memory bandwidth is boosted from 800 gigabytes per second to 5.2 terabytes per second.

“Our use of chiplets in this product is very, very strategic,” said Su, because of the ability to mix and match different kinds of compute, swapping out CPU or GPU.

Su said the MI300X will offer 2.4 times the memory density of Nvidia’s H100 “Hopper” GPU, and 1.6 times the memory bandwidth.

“The generative AI, large language models have changed the landscape,” said Su. “The need for more compute is growing exponentially, whether you’re talking about training or about inference.”

To demonstrate the need for powerful computing, Su showed the part working on what she said is the most popular large language model at the moment, the open source Falcon-40B. Language models require more compute as they are built with greater and greater numbers of what are called neural network “parameters.” The Falcon-40B consists of 40 billion parameters.

The MI300X, she said, is the first chip that is powerful enough to run a neural network of that size entirely in memory, rather than having to move data back and forth to and from external memory.

Su demonstrated the MI300X creating a poem about San Francisco using Falcon-40B.

“A single MI300X can run models up to approximately 80 billion parameters” in memory, she said.
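As a rough check on that figure, the sketch below estimates the memory needed just to hold a model's weights. It assumes 2 bytes per parameter (16-bit weights), a precision the presentation did not specify, and it ignores activations and the key-value cache, so real headroom would be smaller. The helper name `weights_gb` is hypothetical.

```python
# Back-of-the-envelope estimate. bytes_per_param=2 (16-bit weights) is an
# assumption, not a figure from AMD's presentation.
MI300X_MEMORY_GB = 192  # HBM3 capacity quoted for the MI300X


def weights_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Gigabytes (10^9 bytes) needed to hold the weights alone."""
    # billions of parameters * bytes per parameter = billions of bytes = GB
    return params_billions * bytes_per_param


for name, params in [("Falcon-40B", 40), ("80B-parameter model", 80)]:
    need = weights_gb(params)
    fits = need <= MI300X_MEMORY_GB
    print(f"{name}: {need:.0f} GB of weights, fits in 192 GB: {fits}")
```

Under these assumptions, 80 billion parameters need about 160 GB, which fits in 192 GB, while a model of around 100 billion parameters would not.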

“When you compare MI300X to the competition, MI300X offers 2.4 times more memory, and 1.6 times more memory bandwidth, and with all of that additional memory capacity, we actually have an advantage for large language models because we can run larger models directly in memory.”

To be able to run the entire model in memory, said Su, means that, “for the largest models, that actually reduces the number of GPUs you need, significantly speeding up the performance, especially for inference, as well as reducing the total cost of ownership.”
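The GPU-count argument can be illustrated with a minimal sketch. It assumes 16-bit weights and counts only the weights, and it uses the H100's 80 GB capacity as the comparison point. The 70-billion-parameter model size and the `gpus_needed` helper are hypothetical choices for illustration, not figures from the presentation.

```python
import math


def gpus_needed(params_billions: float, gpu_memory_gb: float,
                bytes_per_param: int = 2) -> int:
    """Smallest number of GPUs whose combined memory holds the weights.

    Assumes 16-bit weights by default and ignores activations, the
    key-value cache, and per-GPU overhead, so real counts can be higher.
    """
    weights_gb = params_billions * bytes_per_param
    return math.ceil(weights_gb / gpu_memory_gb)


# Hypothetical 70-billion-parameter model (140 GB of 16-bit weights).
print(gpus_needed(70, gpu_memory_gb=80))   # 80 GB per GPU (H100-class)
print(gpus_needed(70, gpu_memory_gb=192))  # 192 GB per GPU (MI300X)
```

In this simplified model the same network is split across two 80 GB GPUs but fits on a single 192 GB part.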

“I love this chip, by the way,” enthused Su. “We love this chip.”

“With MI300X, you can reduce the number of GPUs, and as model sizes keep growing, this will become even more important.”

“With more memory, more memory bandwidth, and fewer GPUs needed, we can run more inference jobs per GPU than you could before,” said Su. That will reduce the total cost of ownership for large language models, she said, making the technology more accessible.
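A common rule of thumb helps explain why memory bandwidth matters for inference: when a model generates text one token at a time for a single request, every weight has to be read from memory for each token, so bandwidth divided by model size gives a ceiling on tokens per second. The sketch below applies that rule under the assumption of 16-bit weights. It is an idealized upper bound, not a benchmark and not a figure from AMD, and `max_tokens_per_second` is a hypothetical helper.

```python
def max_tokens_per_second(params_billions: float, bandwidth_tb_s: float,
                          bytes_per_param: int = 2) -> float:
    """Idealized ceiling on single-request token generation speed.

    Assumes each token requires one full read of the weights and that
    memory bandwidth is the only limit (no compute or cache effects).
    """
    weights_bytes = params_billions * 1e9 * bytes_per_param
    bandwidth_bytes_per_s = bandwidth_tb_s * 1e12
    return bandwidth_bytes_per_s / weights_bytes


# Falcon-40B with the MI300X's quoted 5.2 TB/s of memory bandwidth.
print(round(max_tokens_per_second(40, 5.2)))
```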

To compete with Nvidia’s DGX systems, Su unveiled a family of AI computers, the “AMD Instinct Platform.” The first instance of that will combine eight of the MI300X with 1.5 terabytes of HBM3 memory. The server conforms to the industry-standard Open Compute Platform spec.
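The platform's memory figure follows from the per-GPU capacity, as this short calculation shows. The only assumption is the unit convention: the total comes to 1.5 terabytes when a terabyte is counted as 1,024 gigabytes.

```python
GPUS_PER_PLATFORM = 8    # MI300X parts in the first AMD Instinct Platform
MEMORY_PER_GPU_GB = 192  # HBM3 capacity quoted per MI300X

total_gb = GPUS_PER_PLATFORM * MEMORY_PER_GPU_GB
total_tb = total_gb / 1024  # assumes 1 TB = 1,024 GB

print(f"{total_gb} GB total, or {total_tb} TB")
```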

“For customers, they can use all this AI compute capability in memory in an industry-standard platform that drops right into their existing infrastructure,” said Su.

Unlike the MI300X, which is only a GPU, the existing MI300A goes up against Nvidia’s Grace Hopper combo chip, which uses Nvidia’s Grace CPU and its Hopper GPU, and which the company announced last month is in full production.

The MI300A is being built into the El Capitan supercomputer under construction at the Department of Energy’s Lawrence Livermore National Laboratories, noted Su.

The MI300A is currently being shown as a sample to AMD customers, and the MI300X will begin sampling to customers in the third quarter of this year, said Su. Both will be in volume production in the fourth quarter, she said.

You can watch a replay of the presentation on the Web site set up by AMD for the news.