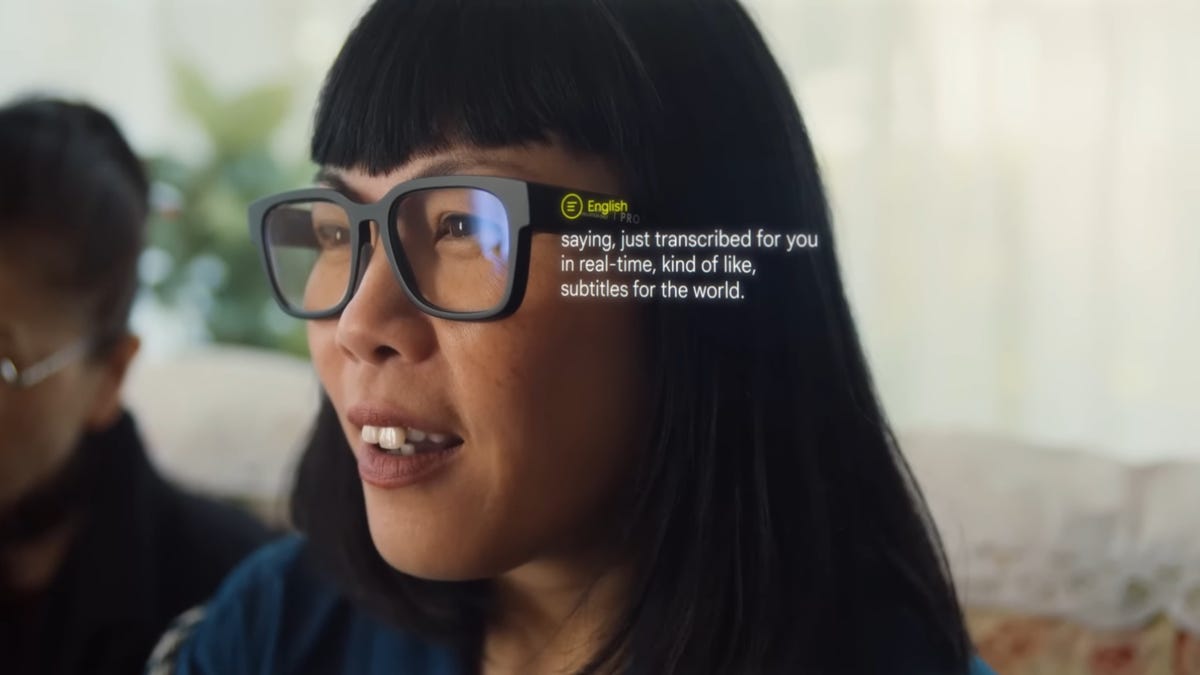

Exactly one year ago, Google unveiled a pair of augmented reality (AR) glasses at its I/O developer conference. But unlike Google Glass, this new concept, which didn’t have a name at the time (and still doesn’t), demonstrated the practicality of digital overlays by showing real-time language translation while you converse with another person.

It wasn’t about casting magic spells or seeing dancing cartoons, but rather about making something we all do every day more accessible: talking.


The concept looked like a regular pair of glasses, making it clear that you didn’t have to look like a cyborg to reap the benefits of today’s technology. But, again, it was just a concept, and Google hasn’t really talked about the product since then.

Twelve months have passed, and the hype around AR has been replaced by another acronym: AI. Most of Google’s and the tech industry’s focus has shifted toward artificial intelligence and machine learning and further away from metaverses and, I guess, glasses that let you transcribe language in real time. Google literally said the word “AI” 143 times during yesterday’s I/O event, as counted by CNET.

But something else also caught my eye during the event. No, it wasn’t Sundar Pichai’s declaration that hot dogs are actually tacos, but a feature that Google briefly demoed with the new Pixel Fold. (The taco of smartphones? Never mind.)

The company calls it Dual Screen Interpreter Mode, a transcription feature that uses the foldable’s front and back screens and the Tensor G2’s processing power to simultaneously display what one person is saying and how it translates into another language. At a glance, you can understand what someone else is saying, even if they don’t speak the same language as you. Sound familiar?

I’m not saying a foldable phone is a direct replacement for AR glasses; I still believe there’s a future where the latter exists and potentially replaces all the devices we carry around. But Dual Screen Interpreter Mode on the Pixel Fold is the closest callback we’ve gotten to Google’s year-old concept, and I’m excited to test the feature when it arrives.


The Pixel Fold is available for pre-order now, and Google says it will start shipping next month. But even then, you’ll have to wait until the fall for the translation feature’s official release, so stay tuned.