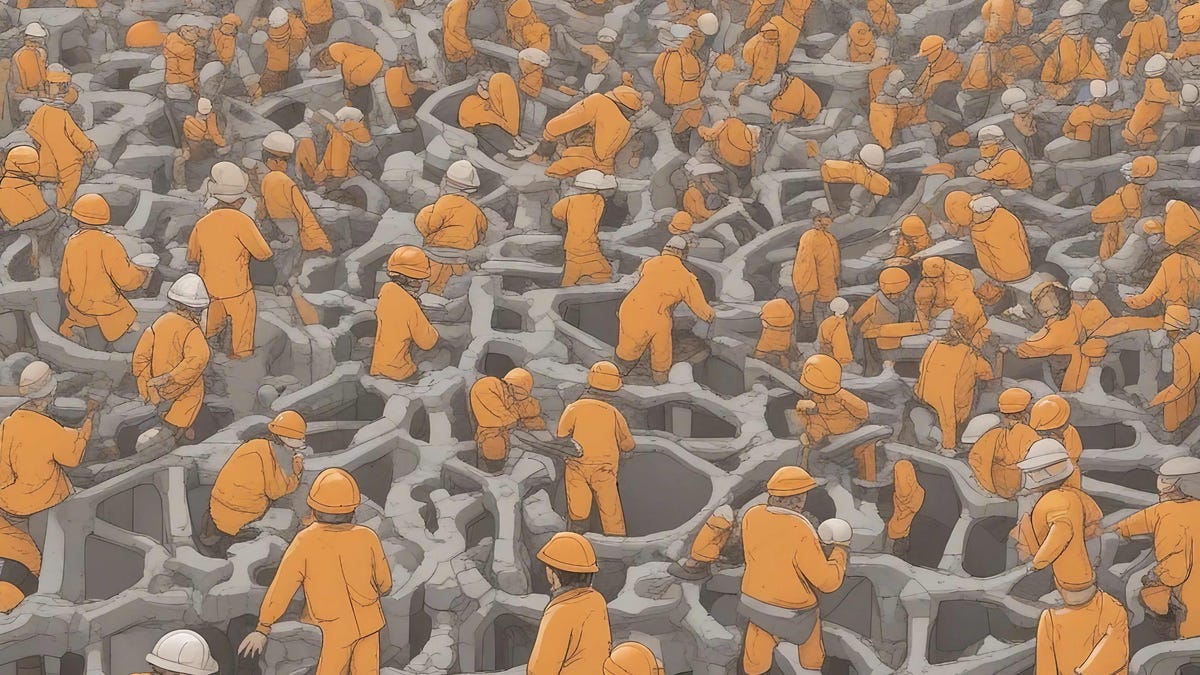

Scientists are discovering that more can be achieved by removing three-quarters of a neural net.

IST Austria

A major pursuit in the science of artificial intelligence (AI) is the balance between how big a program is and how much data it uses. After all, it costs real money, tens of millions of dollars, to buy Nvidia GPU chips to run AI and to gather billions of bytes of data to train neural networks, so how much you need is a question with very practical implications.

Google’s DeepMind unit last year codified the exact balance between computing power and training data as a kind of law of AI. That rule of thumb, which has come to be called “The Chinchilla Law”, says you can reduce the size of a program to just a quarter of its initial size if you also increase the amount of data it's trained on by four times the initial size.
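The trade-off described above can be checked with a little arithmetic. The sketch below is not from the article: it assumes the widely used approximation that training cost is about 6 × parameters × training tokens, and the starting figures are hypothetical, chosen only to make the numbers easy to follow.

```python
def training_flops(params: float, tokens: float) -> float:
    """Rough training cost via the 6 * N * D approximation (an assumption)."""
    return 6.0 * params * tokens


# Hypothetical starting point; illustrative values, not real model figures.
base_params = 100e9   # parameters in the larger program
base_tokens = 500e9   # training tokens it sees

# The rule of thumb as the article states it: a quarter the size, four times the data.
small_params = base_params / 4
more_tokens = base_tokens * 4

base_cost = training_flops(base_params, base_tokens)
new_cost = training_flops(small_params, more_tokens)

print(f"compute ratio (small / large): {new_cost / base_cost:.2f}")
```

Under that approximation the two configurations cost the same amount of compute, so the rule of thumb is about spending the same budget differently rather than spending less.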

The point of Chinchilla, and it's an important one, is that programs can achieve an optimal result in terms of accuracy while being less gigantic. Build smaller programs, but train for longer on the data, says Chinchilla. Less is more, in other words, in deep-learning AI, for reasons not yet entirely understood.

In a paper published this month, DeepMind and its collaborators build upon that insight by suggesting it's possible to do even better by stripping away whole parts of the neural network, pushing performance further once a neural net has hit a wall.

According to lead author Elias Frantar of Austria’s Institute of Science and Technology, and collaborators at DeepMind, you can get the same results in terms of accuracy from a neural network that's half the size of another if you employ a technique called “sparsity”.

Sparsity, an obscure element of neural networks that has been studied for years, is a technique that borrows from the actual structure of human neurons. Sparsity refers to turning off some of the connections between neurons. In human brains, these connections are known as synapses.

The vast majority of human synapses don't connect. As scientist Torsten Hoefler and team at ETH Zurich observed in 2021, “Biological brains, especially the human brain, are hierarchical, sparse, and recurrent structures,” adding, “the more neurons a brain has, the sparser it gets.”

The thinking goes that if you could approximate that natural phenomenon of a very small number of connections, you could do a lot more with any neural net with a lot less effort, and a lot less time, money, and energy.

In an artificial neural network, such as a deep-learning AI model, the equivalent of synaptic connections are “weights” or “parameters”. Synapses that don't have connections would be weights that have zero values: they don't compute anything, so they don't take up any computing energy. AI scientists refer to sparsity, therefore, as zeroing out the parameters of a neural net.
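To make the idea of zeroing out parameters concrete, here is a minimal sketch in plain Python. The article does not say how the weights to remove are chosen; this example assumes magnitude pruning, one common choice, in which the weights closest to zero are dropped. The function name `prune_by_magnitude` and the random weights are hypothetical, used purely for illustration.

```python
import random


def prune_by_magnitude(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_zero = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_zero])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


random.seed(0)
# Stand-in for one layer's weights; real networks have millions or billions.
dense = [random.gauss(0.0, 1.0) for _ in range(1000)]

# Zero out three-quarters of the weights, the level discussed in the article.
sparse = prune_by_magnitude(dense, 0.75)
nonzero = sum(1 for w in sparse if w != 0.0)
print(f"{nonzero} of {len(sparse)} weights remain non-zero")
```

The surviving weights keep their original values and positions; only the count of non-zero parameters changes, which is the quantity the researchers hold fixed when they compare sparse and dense models.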

In the new DeepMind paper, posted on the arXiv pre-print server, Frantar and team ask: if smaller networks can equal the work of larger networks, as the prior study showed, how much can sparsity help push performance even further by removing some weights?

The researchers find that if you zero out three-quarters of the parameters of a neural net, making it more sparse, it can do the same work as a neural net over two times its size.

As they put it: “The key take-away from these results is that as one trains significantly longer than Chinchilla (dense compute optimal), more and more sparse models start to become optimal in terms of loss for the same number of non-zero parameters.” The term “dense compute model” refers to a neural net that has no sparsity, so that all its synapses are working.

“This is because the gains of further training dense models start to slow down significantly at some point, allowing sparse models to overtake them.” In other words, normal, non-sparse models (dense models) start to break down where sparse versions take over.

The practical implication of this research is striking. When a neural network starts to reach its limit in terms of performance, actually reducing the number of its parameters that function, by zeroing them out, will extend the neural net's performance further as you train it for a longer and longer time.

“Optimal sparsity levels continuously increase with longer training,” write Frantar and team. “Sparsity thus provides a means to further improve model performance for a fixed final parameter cost.”

For a world worried about the energy cost of increasingly power-hungry neural nets, the good news is that scientists are finding a lot more can be done with less.