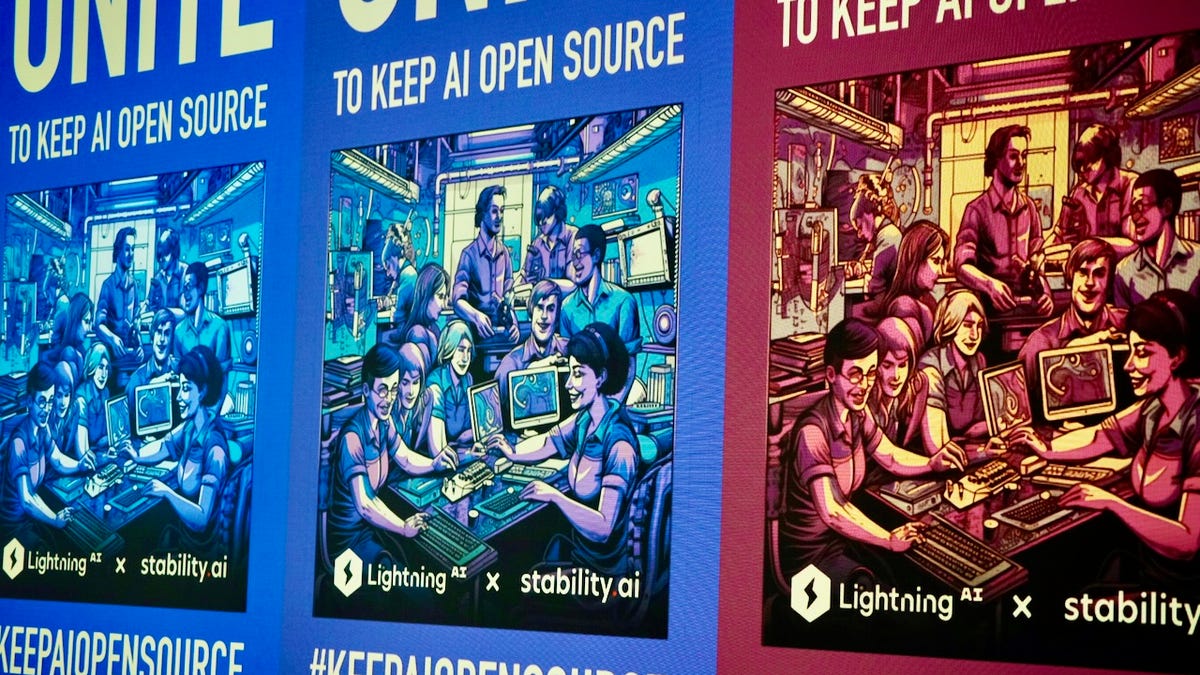

Stability.ai + Lightning.ai

In a way, open source and artificial intelligence were born together.

Back in 1971, if you’d mentioned AI to most people, they might have thought of Isaac Asimov’s Three Laws of Robotics. However, AI was already a real subject that year at MIT, where Richard M. Stallman (RMS) joined MIT’s Artificial Intelligence Lab. Years later, as proprietary software sprang up, RMS developed the radical idea of Free Software. Decades later, this concept, transformed into open source, would become the birthplace of modern AI.

It wasn’t a science-fiction writer but a computer scientist, Alan Turing, who started the modern AI movement. Turing’s 1950 paper “Computing Machinery and Intelligence” originated the Turing Test. The test, in brief, states that if a machine can fool you into thinking that you’re talking with a human being, it’s intelligent.

According to some people, today’s AIs can already do this. I don’t agree, but we’re clearly on our way.

In 1955, computer scientist John McCarthy coined the term “artificial intelligence” and, a few years later, created the Lisp language. McCarthy’s achievement, as computer scientist Paul Graham put it, “did for programming something like what Euclid did for geometry. He showed how, given a handful of simple operators and a notation for functions, you can build a whole programming language.”

Lisp, in which data and code are mixed, became AI’s first language. It was also RMS’s first programming love.

So, why didn’t we have a GNU-ChatGPT in the 1980s? There are many theories. The one I favor is that early AI had the right ideas in the wrong decade. The hardware wasn’t up to the challenge. Other essential elements, like Big Data, weren’t yet available to help real AI get underway. Open-source projects such as Hadoop, Spark, and Cassandra provided the tools that AI and machine learning needed for storing and processing large amounts of data on clusters of machines. Without this data and quick access to it, Large Language Models (LLMs) couldn’t work.

Today, even Bill Gates, no fan of open source, admits that open-source-based AI is the biggest thing since he was introduced to the idea of a graphical user interface (GUI) in 1980. From that GUI idea, you may recall, Gates built a little program called Windows.

Specifically, today’s wildly popular generative AI models, such as ChatGPT and Llama 2, sprang from open-source origins. That’s not to say ChatGPT, Llama 2, or DALL-E are open source. They’re not.

Oh, they were supposed to be. As Elon Musk, an early OpenAI investor, said: “OpenAI was created as an open source (which is why I named it ‘Open’ AI), non-profit company to serve as a counterweight to Google, but now it has become a closed source, maximum-profit company effectively controlled by Microsoft. Not what I intended at all.”

Be that as it may, OpenAI and all the other generative AI programs are built on open-source foundations. In particular, Hugging Face’s Transformers is the top open-source library for building today’s machine learning (ML) models. Funny name and all, it provides pre-trained models, architectures, and tools for natural language processing tasks. This enables developers to build upon existing models and fine-tune them for specific use cases. Specifically, ChatGPT relies on Hugging Face’s library for its GPT LLMs. Without Transformers, there’s no ChatGPT.

In addition, TensorFlow and PyTorch, developed by Google and Facebook, respectively, fueled ChatGPT. These Python frameworks provide essential tools and libraries for building and training deep learning models. Needless to say, other open-source AI/ML programs are built on top of them. For example, Keras, a high-level TensorFlow API, is often used by developers without deep learning backgrounds to build neural networks.

You can argue until you’re blue in the face as to which one is better, and AI programmers do, but both TensorFlow and PyTorch are used in many projects. Behind the scenes of your favorite AI chatbot is a mix of different open-source projects.

Some top-level programs, such as Meta’s Llama 2, claim that they’re open source. They’re not. Although many open-source programmers have turned to Llama because it’s about as open-source friendly as any of the big AI programs, when push comes to shove, Llama 2 isn’t open source. True, you can download it and use it. With model weights and starting code for the pre-trained model and conversational fine-tuned versions, it’s easy to build Llama-powered applications. There’s just one tiny problem buried in the licensing: If your program is wildly successful and you have “greater than 700 million monthly active users in the preceding calendar month, you must request a license from Meta, which Meta may grant to you in its sole discretion, and you are not authorized to exercise any of the rights under this Agreement unless or until Meta otherwise expressly grants you such rights.”

You can give up any dreams you might have of becoming a billionaire by writing Virtual Girl/Boy Friend based on Llama. Mark Zuckerberg will thank you for helping him to another few billion.

Now, there do exist some true open-source LLMs, such as Falcon 180B. However, nearly all the major commercial LLMs aren’t properly open source. Mind you, all the major LLMs were trained on open data. For instance, GPT-4 and most other large LLMs get some of their data from Common Crawl, a text archive that contains petabytes of data crawled from the web. If you’ve written something on a public site (a birthday wish on Facebook, a Reddit comment on Linux, a Wikipedia mention, or a book on Archive.org) and it was written in HTML, chances are your data is in there somewhere.

So, is open source doomed to always be a bridesmaid, never a bride, in the AI business? Not so fast.

In a leaked internal Google document, a Google AI engineer wrote, “The uncomfortable truth is, we aren’t positioned to win this [Generative AI] arms race, and neither is OpenAI. While we’ve been squabbling, a third faction has been quietly eating our lunch.”

That third player? The open-source community.

As it turns out, you don’t need hyperscale clouds or thousands of high-end GPUs to get useful answers out of generative AI. In fact, you can run LLMs on a smartphone: People are running foundation models on a Pixel 6 at 5 LLM tokens per second. You can also fine-tune a personalized AI on your laptop in an evening. When you can “personalize a language model in a few hours on consumer hardware,” the engineer noted, “[it’s] a big deal.” That’s for sure.

Thanks to fine-tuning mechanisms such as low-rank adaptation (LoRA), which Hugging Face makes available as open source, you can perform model fine-tuning for a fraction of the cost and time of other methods. How much of a fraction? How does personalizing a language model in a few hours on consumer hardware sound to you?
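To make that “fraction” concrete, here is a toy, pure-Python sketch of the core LoRA idea. It is an illustration, not the code of Hugging Face or any other library. The pretrained weight matrix W stays frozen; only two small factors, B (d × r) and A (r × k), are trained, and their product, scaled by alpha / r, is added to W. The helper names, the hypothetical 4096 × 4096 layer, and the rank r = 8 are assumptions chosen for illustration.

```python
# Toy sketch of the LoRA idea (illustrative, not any library's implementation):
# keep the pretrained weight W frozen and learn only two small factors,
# B (d x r) and A (r x k), whose product is the low-rank update.


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]


def lora_merge(W, B, A, alpha):
    """Return the adapted weight W + (alpha / r) * (B @ A)."""
    r = len(A)  # adapter rank = number of rows in A
    delta = matmul(B, A)
    scale = alpha / r
    return [
        [W[i][j] + scale * delta[i][j] for j in range(len(W[0]))]
        for i in range(len(W))
    ]


def trainable_params(d, k, r):
    """Trainable parameters: full d x k update vs. a rank-r adapter (B and A)."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    # Hypothetical 4096 x 4096 layer with a rank-8 adapter.
    full, lora = trainable_params(4096, 4096, 8)
    print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%

    W = [[1.0, 0.0], [0.0, 1.0]]  # frozen 2 x 2 weight
    B = [[1.0], [2.0]]            # d x r, with r = 1
    A = [[0.5, 0.5]]              # r x k
    print(lora_merge(W, B, A, alpha=1.0))  # [[1.5, 0.5], [1.0, 2.0]]
```

With these assumed sizes, the adapter trains about 0.39% of the parameters that a full update of that one layer would. After training, B·A can be merged back into W, so the adapted model is no more expensive to run than the original.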

The Google developer added:

“Part of what makes LoRA so effective is that, like other forms of fine-tuning, it’s stackable. Improvements like instruction tuning can be applied and then leveraged as other contributors add on dialogue, or reasoning, or tool use. While the individual fine tunings are low rank, their sum need not be, allowing full-rank updates to the model to accumulate over time. This means that as new and better datasets and tasks become available, the model can be cheaply kept up to date without ever having to pay the cost of a full run.”
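The point that stacked low-rank fine-tunings “need not” sum to a low-rank update can be checked with a toy example. The pure-Python sketch below illustrates the linear algebra and does not reflect how any real model stores its adapters. It adds three hypothetical rank-1 updates to a 3 × 3 weight delta and computes the rank of the running total with exact Gauss-Jordan elimination. The vectors and the contributor labels are made-up assumptions.

```python
from fractions import Fraction

# Toy check: each adapter below is rank 1, but their running sum
# can reach full rank. The vectors are made up for illustration.


def outer(u, v):
    """Rank-1 update u v^T built from two vectors."""
    return [[Fraction(a) * b for b in v] for a in u]


def add(X, Y):
    """Element-wise sum of two equally sized matrices."""
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def rank(M):
    """Matrix rank via Gauss-Jordan elimination over exact fractions."""
    M = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(M), len(M[0])
    r = 0  # number of pivot rows found so far
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(rows):
            if i != r and M[i][c] != 0:
                f = M[i][c] / M[r][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return r


def running_ranks(adapters):
    """Rank of the accumulated update after each adapter is stacked on."""
    n, m = len(adapters[0]), len(adapters[0][0])
    total = [[Fraction(0)] * m for _ in range(n)]
    ranks = []
    for delta in adapters:
        total = add(total, delta)
        ranks.append(rank(total))
    return ranks


# Three hypothetical contributors' rank-1 fine-tunings of a 3 x 3 weight.
ADAPTERS = [
    outer([1, 0, 0], [1, 2, 0]),  # e.g. instruction tuning
    outer([0, 1, 0], [0, 1, 1]),  # e.g. dialogue
    outer([0, 0, 1], [1, 0, 1]),  # e.g. tool use
]

if __name__ == "__main__":
    print([rank(d) for d in ADAPTERS])  # [1, 1, 1]
    print(running_ranks(ADAPTERS))      # [1, 2, 3]
```

Each contribution on its own changes the weights along only one direction. After three contributions, however, the accumulated update has full rank 3, which is the property the memo credits with letting improvements pile up without paying for a full training run.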

Our mystery programmer concluded, “Directly competing with open source is a losing proposition.… We should not expect to be able to catch up. The modern internet runs on open source for a reason. Open source has some significant advantages that we cannot replicate.”

Thirty years ago, no one dreamed that an open-source operating system could ever usurp proprietary systems like Unix and Windows. Perhaps it will take a lot less than three decades for a truly open, soup-to-nuts AI program to overwhelm the semi-proprietary programs we’re using today.